Locale magic (literally)

Submitted by Christoph on 9 August, 2009 - 13:42

Another programming-centric post, and a follow-up to Thursday's post about locale issues with Turkish.

In that post I showed some problems with locale-dependent mappings using the case of Turkish, which maps the small Latin letter i to the uppercase İ, which also has a dot on top. Now join me for some more magic. On Unix you need to have the proper locale generated, which on Debian works with dpkg-reconfigure locales.

```python
>>> import locale
>>> locale.setlocale(locale.LC_ALL, 'tr_TR.ISO-8859-9')
'tr_TR.ISO-8859-9'
>>> from cjklib.reading import ReadingFactory
>>> f = ReadingFactory()
>>> 'LIO' == u'LIO'
True
>>> f.isReadingEntity('LIO', 'WadeGiles')
False
>>> f.isReadingEntity(u'LIO', 'WadeGiles')
True
```

So what are we doing? We set the locale to Turkish, so that strings are interpreted using the rules of the Turkish script. Then we make sure that the plain Python string 'LIO' equals the Unicode string u'LIO' (we can do this under Python 2.x, but Python 3 will lose the original string class). Now comes the fun part. We load cjklib and check whether LIO is a valid syllable in the Wade-Giles romanization system. We get False for the plain string and True for the Unicode string. Wow. What happened?

If this reminds you of an earlier trick of mine, "Unicode - Don't trust your eyes", don't be mistaken: this time I'm not playing tricks on you.

The WadeGilesOperator, which actually handles this request, first normalizes the string, converting it to lowercase before looking up the form in its table. And here is the issue: under the current locale the plain string LIO is converted to lıo (with a dotless ı), which of course is not a valid syllable. The Unicode string, in contrast, is lowercased with Unicode's locale-independent default mapping and becomes lio. So I learnt something today: I have to make sure we only work on Unicode objects in cjklib. I hope you learnt something new, too.
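The effect can be replayed without cjklib. The following Python 3 sketch assumes a tiny hypothetical syllable table (SYLLABLES) and emulates the Turkish 8-bit lowercasing with a byte translation table, since Python 3 strings no longer consult the C locale. The helper names are made up for illustration; this is not cjklib's code.

```python
# Hypothetical excerpt of a Wade-Giles syllable table; cjklib's real data is far larger.
SYLLABLES = {'lio', 'liu', 'lo'}

# Emulates Python 2's 8-bit str.lower() under tr_TR.ISO-8859-9 for A-Z only:
# capital I (0x49) becomes 0xFD, which is the dotless ı in ISO-8859-9.
TR_LOWER_8BIT = bytes.maketrans(b'ABCDEFGHIJKLMNOPQRSTUVWXYZ',
                                b'abcdefgh\xfdjklmnopqrstuvwxyz')


def is_syllable_locale_lowered(raw: bytes) -> bool:
    """Lowercase the raw bytes the Turkish 8-bit way, then look the result up."""
    lowered = raw.translate(TR_LOWER_8BIT).decode('iso-8859-9')
    return lowered in SYLLABLES


def is_syllable_unicode(entity, encoding: str = 'ascii') -> bool:
    """Decode to text first; str.lower() then applies Unicode's locale-independent mapping."""
    if isinstance(entity, bytes):
        entity = entity.decode(encoding)
    return entity.lower() in SYLLABLES


print(b'LIO'.translate(TR_LOWER_8BIT).decode('iso-8859-9'))  # lıo
print(is_syllable_locale_lowered(b'LIO'))  # False
print(is_syllable_unicode(b'LIO'))         # True
```

Decoding explicitly at the boundary (here assuming ASCII input) is the point: once the data is text, lowercasing no longer depends on whatever locale the user happens to run.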

Update: It seems Python in general has a problem with the Turkish locale. cjklib now breaks with Python 2.6 under said locale.

Python, Unicode and the digital divide

Submitted by Christoph on 6 August, 2009 - 14:12

One could say that Unicode is the reflection of globalization in computing. As a computer scientist, I find that this huge project very much gets my attention and fascinates me on a daily basis. Unicode is not just a feature; it is a foundation that bridges languages and cultures in the digital world.

You might have heard of the digital divide that separates groups with good access to digital solutions from those without. One factor driving this divide is that technological solutions cannot easily be transferred and implemented across the global community. Take speech technology, for example. Speech recognition started out with English, was then extended to French, German and Japanese, and now needs to take the next step. This technology comes at great cost and currently needs to be reworked for every language in question, with little synergy between them.

Very often these problems are very practical. Let me introduce you to one I am facing. First, a short note on Unicode. Known to most as the universal character encoding, it is more than that: it comes with a wide range of language algorithms and solutions, including basic operations like conversion to uppercase, lowercase or titlecase, which I mentioned before.

Upper-/lowercase conversion is an algorithmic problem that was pretty easy for the original ASCII (the mother of encodings): add 32 to the code point of 'A' to get 'a', and likewise for B to Z. But it is not always that easy. German adds umlauts and a sharp S: ß. Converting Fuß ("foot") to uppercase results in FUSS, which most likely will not change in the near future, even though an uppercase sharp S (ẞ) was added to Unicode recently.
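Both cases can be checked quickly in Python 3. The sketch below implements the ASCII "add 32" rule by hand (ascii_lower is a hypothetical helper) and then compares it with what Python's Unicode-aware str methods do for the German sharp S.

```python
def ascii_lower(text: str) -> str:
    """Lowercase only A-Z by adding 32 to the code point; everything else is left alone."""
    return ''.join(chr(ord(c) + 32) if 'A' <= c <= 'Z' else c for c in text)


print(ord('a') - ord('A'))  # 32
print(ascii_lower('FOOT'))  # foot
print('Fuß'.upper())        # FUSS: one character becomes two
print('\u1e9e'.lower())     # ß: the new capital sharp S lowercases to ß
print('ß'.upper())          # SS: but ß still uppercases to SS by default
```

Note that the mapping is not a simple per-character offset anymore: the uppercase string can be longer than the original.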

A more complicated case is Turkish, which, among other additional characters, has "two i's": one without a dot (ı) and one with a dot (i). The distinction is carried over consistently to uppercase, so the former maps to I and the latter to İ. While this seems as straightforward as for other characters, it creates a notable crosswise mapping: in most languages I is the uppercase of i, but in Turkish it is the uppercase of ı.
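To see why the crosswise mapping hurts, here is a small Python 3 sketch of Unicode's default (language-neutral) mappings, which are what str.upper() and str.lower() use. The constant names are just for readability.

```python
import unicodedata

DOTLESS_SMALL_I = '\u0131'   # ı
DOTTED_CAPITAL_I = '\u0130'  # İ

# Python's str methods use Unicode's default, language-neutral mappings.
print('i'.upper(), DOTLESS_SMALL_I.upper())  # I I: both small letters share one capital
print(DOTLESS_SMALL_I.upper().lower())       # i: the round trip turns ı into i

# İ has no single-character default lowercase; it becomes i + COMBINING DOT ABOVE.
print([unicodedata.name(c) for c in DOTTED_CAPITAL_I.lower()])
# ['LATIN SMALL LETTER I', 'COMBINING DOT ABOVE']
```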

How is this fact reflected in Python?

```python
>>> u'I'.lower()
u'i'
```

This is correct for most languages but, as said before, wrong for Turkish. People have come across this problem and were told that Python probably won't ship with a native solution but will most likely rely on a binding of IBM's ICU library.

Using the PyICU module, you can already solve the issue at hand:

```python
>>> from PyICU import UnicodeString, Locale
>>> trLocale = Locale('tr_TR')
>>> trLocale.getDisplayName(Locale('en'))
u'Turkish (Turkey)'
>>> print unicode(UnicodeString('i').toUpper(trLocale))
İ
```
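Without ICU at hand, the two Turkish pairs can be special-cased by hand. This is only a minimal Python 3 sketch under the assumption that the i/ı/I/İ letters are the only difference that matters; it ignores other rules ICU applies (for example sequences with a combining dot above), and upper_tr/lower_tr are hypothetical names.

```python
def upper_tr(text: str) -> str:
    """Uppercase with the Turkish I rules: i -> İ and ı -> I, everything else default."""
    return text.replace('i', '\u0130').replace('\u0131', 'I').upper()


def lower_tr(text: str) -> str:
    """Lowercase with the Turkish I rules: I -> ı and İ -> i, everything else default."""
    return text.replace('I', '\u0131').replace('\u0130', 'i').lower()


print(upper_tr('i'))    # İ
print(lower_tr('LIO'))  # lıo
print(lower_tr(upper_tr('\u0131i')) == '\u0131i')  # True: the distinction survives
```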

Another problem specific to Turkish, again involving the capital I's, arises when English and Turkish are mixed, as the two have different upper-/lowercase mappings. A tool, even if its user interface is translated, might internally map English commands and fail horribly. For example, while the command "QUIT" terminates the program in English, "quit" entered under a Turkish locale resolves to "QUİT", which is a different string.
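The command failure can be sketched in a few lines of Python 3. The command table and the simplified upper_tr helper are hypothetical; the helper only applies the i to İ rule, which is all this example needs.

```python
# Hypothetical internal command table; the keys are plain ASCII.
COMMANDS = {'QUIT': 'terminate the program', 'LIST': 'list entries'}


def upper_tr(text: str) -> str:
    """Simplified Turkish uppercasing; only the i -> İ rule matters for this example."""
    return text.replace('i', '\u0130').upper()


def resolve(typed: str, to_upper):
    """Map user input onto an internal command name via the given case mapping."""
    return COMMANDS.get(to_upper(typed))


print(upper_tr('quit'))            # QUİT
print(resolve('quit', upper_tr))   # None
print(resolve('quit', str.upper))  # terminate the program
```

For internal identifiers like these, a locale-independent mapping (Python 3's str.upper() never consults the locale) is the safer choice; Turkish-aware casing belongs to text shown to the user.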

Ever thought that basic computing problems were solved in the 1980s?

Now I wonder how the Turkish community programs in Python nowadays. 8-bit string classes act in a locale-dependent way, while the future Unicode-only strings will lose this behavior.

Another thought: wouldn't it have been possible to keep the i to I mapping for the Turkish locale and to create a "really-no-dot-I" as the uppercase equivalent of ı, then let the font take care of rendering the "big I" with a dot? For the sake of backwards compatibility it was certainly too late to think about this when Unicode was born.

Update: It seems that, following PEP 358, the bytes class, which replaces the plain string class in Python 3, ships with isupper() and friends, which only work for the Latin characters of plain ASCII (A-Z) and lose all locale-dependent behaviour.
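A quick Python 3 check of that ASCII-only behaviour, using the ISO-8859-9 bytes for ı (0xFD) and İ (0xDD) from the Turkish locale example:

```python
# In Python 3, bytes case methods only know ASCII A-Z and a-z.
print(b'quit'.upper())    # b'QUIT'
print(b'\xfd'.upper())    # b'\xfd': 0xFD (ı in ISO-8859-9) is left untouched
print(b'\xdd'.isupper())  # False: 0xDD (İ in ISO-8859-9) is not seen as a letter

# Decoding to str first gives Unicode (still locale-independent) case mappings.
print(b'\xfd'.decode('iso-8859-9').upper())  # I
```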

Wrong Diacritics with Pinyin

Submitted by Christoph on 2 August, 2009 - 16:46

Dear lazyweb,

I am looking for examples of bad Pinyin where invalid diacritics are being used.

I already have two for the third tone, but I am curious whether other tones see similar errors.

- Xiàndài Hànyû Dàcídiân (Circumflex)

- Wŏ huì shuō yìdiănr (Breve)

Possibly the letter ü suffers from similar problems, though I don't mean the substitutes v, u: and uu.
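Such errors are easy to spot mechanically once the text is decomposed: Pinyin only needs four tone marks plus the diaeresis of ü, so any other combining mark is suspicious. A Python 3 sketch, with the repair step resting on the assumption that circumflex and breve were always meant as the third-tone caron (true for the two examples above, not necessarily in general):

```python
import unicodedata

# Combining marks that legitimately appear in Pinyin (after NFD decomposition):
# macron (1st tone), acute (2nd), caron (3rd), grave (4th) and the diaeresis of ü.
VALID_MARKS = {'\u0304', '\u0301', '\u030c', '\u0300', '\u0308'}
CIRCUMFLEX, BREVE, CARON = '\u0302', '\u0306', '\u030c'


def suspicious_marks(text):
    """Return the names of combining marks that are not valid Pinyin diacritics."""
    return [unicodedata.name(c) for c in unicodedata.normalize('NFD', text)
            if unicodedata.combining(c) and c not in VALID_MARKS]


def repair_third_tone(text):
    """Assumes every circumflex or breve was meant as a caron (third tone)."""
    decomposed = unicodedata.normalize('NFD', text)
    fixed = decomposed.replace(CIRCUMFLEX, CARON).replace(BREVE, CARON)
    return unicodedata.normalize('NFC', fixed)


print(suspicious_marks('Wŏ huì shuō yìdiănr'))  # ['COMBINING BREVE', 'COMBINING BREVE']
print(repair_third_tone('Hànyû Dàcídiân'))      # Hànyǔ Dàcídiǎn
```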

Colloquial designations of Kangxi radicals

Sorting and indexing English words, or words of other languages written in the Roman alphabet, is pretty easy, as letters are ordered from A to Z. Chinese characters are much more difficult to handle, as setting up a distinct order for each and every character fails due to the sheer number of characters; there isn't even a clear upper limit.

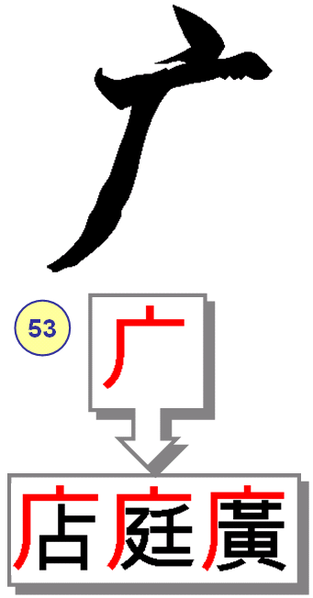

Radical 53 in other characters

Radicals can solve the ordering issue. To understand how they work, you have to know that Chinese characters (and Sino-Japanese characters, ...) are made up of components, the minimal set of which numbers around 1000 if I recall correctly (DeFrancis has something on that). Radicals are a special group of those components: together they (almost) cover the whole, open-ended set of characters, and to some extent they are regarded as reflecting a semantic aspect of each character. Characters are then ordered by their radical first and by the number of remaining strokes second. The construct is man-made and thus doesn't reflect an underlying rule of the writing system, so several radical sets exist. The best known set is the group of 214 Kangxi radicals, which were used in the Kangxi Dictionary and are still used today, even though other sets have been developed since.
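Radical-stroke ordering boils down to sorting by a (radical number, residual strokes) pair. The Python 3 sketch below uses a hand-filled, purely illustrative table for a few characters; a real application would take this data from a character database. The helper kangxi_radical() relies on Unicode's Kangxi Radicals block (U+2F00 to U+2FD5, one code point per radical), whose entries are compatibility-equivalent to the ordinary ideographs.

```python
import unicodedata

# Illustrative (Kangxi radical number, residual stroke count) data, filled in by hand.
RADICAL_INDEX = {
    '一': (1, 0),
    '人': (9, 0),
    '他': (9, 3),
    '你': (9, 5),
    '病': (104, 5),
    '痛': (104, 7),
    '馬': (187, 0),
}


def radical_sort(chars):
    """Sort characters by radical first and by remaining strokes second."""
    return sorted(chars, key=lambda c: RADICAL_INDEX[c])


def kangxi_radical(n):
    """Ideograph for Kangxi radical n (1..214), via its Kangxi Radicals code point."""
    return unicodedata.normalize('NFKC', chr(0x2F00 + n - 1))


print(''.join(radical_sort('痛馬一你病他人')))  # 一人他你病痛馬
print(kangxi_radical(9))                        # 人
```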

But I want to talk about what those radical forms are called, so allow me to go in medias res.

Some of those radicals are characters themselves; some are only found as components or even as single strokes. While the former can easily be named by their character (e.g. 馬, "horse"), distinct names for radical forms have proven helpful, and Herbert A. Giles, for example, lists some in the radical table of his "A Chinese-English Dictionary" (1912). To give an example, 疒 is not used alone as a character but in characters like 病 (bìng, "ill"), and is called 病字旁 (bìngzìpáng), literally "character-病 side", as it occurs on the left side of the character for "ill".

I'm looking for a list of those names. One use case is Eclectus, which has a radical table that lets you search in several ways, one being the Chinese name of the radical. In the following I'll list the names I found, though I'm skeptical about to what extent it will be possible to set up a list that fully covers these colloquial names.

From "A Chinese-English Dictionary"

This list is from Giles' "A Chinese-English Dictionary", second edition from 1912. It is unclear whether all those names are still in use today, or whether some of them changed over the nearly 100 years since.

| Radical | Name | Pinyin |
|---|---|---|
| 1 | 一橫 | yīhéng |
| 2 | 一竪 | yīshù |
| 3 | 一點 | yīdiǎn |
| 4 | 一撇 | yīpiě |
| 7 | 兩橫 | liǎnghéng |
| 9 (Variant) | 單立人 | dānlìrén |
| 13 | 三道框 | sāndàokuàng |
| 14 | 禿寶蓋 | tūbǎogài |
| 15 | 兩點水 | liǎngdiǎnshuǐ |
| 18 (Variant) | 立刀 | lìdāo |
| 22 | 三道框 | sāndàokuàng |
| 23 | 三道框 | sāndàokuàng |
| 26 | 硬耳刀 | yìng'ěrdāo |
| 27 | 禿偏上 | tūpiānshàng |
| 31 | 四道框 | sìdàokuàng |
| 32 | 土堆 | tǔduī |
| 32 (Variant) | 提土 | títǔ |
| 40 | 寳蓋 | bǎogài |
| 45 | 半草 | bàncǎo |
| 47 (Variant) | 三臥人 | sānwòrén |
| 47 (Variant) | 兩臥人 | liǎngwòrén |
| 50 | 大巾旁 | dàjīnpáng |
| 53 | 偏上 | piānshàng |
| 58 | 橫山 | héngshān |
| 59 | 三撇 | sānpiě |
| 60 | 雙立人 | shuānglìrén |
| 61 (Variant) | 竪心 | shùxīn |
| 64 (Variant) | 提手 | tíshǒu |
| 66 | 反文 | fǎnwén |
| 66 (Variant) | 反文 | fǎnwén |
| 85 (Variant) | 三點水 | sāndiǎnshuǐ |
| 86 (Variant) | 四點火 | sìdiǎnhuǒ |
| 93 | 提牛旁 | tíniúpáng |
| 94 (Variant) | 反犬 | fǎnquǎn |
| 94 (Variant) | 犬猶 | quǎnyóu |
| 96 (Variant) | 斜玉 | xiéyù |
| 104 | 病字旁 | bìngzìpáng |
| 108 | 皿堆 | mǐnduī |
| 115 | 禾木 | hémù |
| 116 | 穴字頭 | xuèzìtóu |
| 118 | 竹字頭 | zhúzìtóu |
| 120 | 絞絲 | jiǎosī |
| 122 (Variant) | 扁四 | biǎnsì |
| 130 (Variant) | 肉字旁 | ròuzìpáng |
| 140 (Variant) | 草字頭 | cǎozìtóu |
| 143 | 血堆 | xiěduī |
| 146 | 四字部 | sìzìbù |
| 154 | 具貝邊 | jùbèibiān |
| 157 | 足路 | zúlù |
| 162 (Variant) | 走之 | zǒuzhī |
| 163 (Variant) | 輭耳刀 | ruǎn'ěrdāo |
| 163 (Variant) | 右耳刀 | yòu'ěrdāo |
| 170 (Variant) | 左耳刀 | zuǒ'ěrdāo |
| 173 | 兩字頭 | liǎngzìtóu |

Others from German Wikipedia

This is a list of other names from the radical articles of the German Wikipedia. Some differ only slightly from names in the table above (e.g. abbreviations) but are included nonetheless.

| Radical | Name | Pinyin |
|---|---|---|
| 8 | 頭 | tóu |
| 9 (Variant) | 單人旁 | dānrénpáng |
| 12 (Variant) | 倒八字 | dàobāzì |
| 12 (Variant) | 羊角 | yángjiǎo |
| 13 | 上三框 | shàngsānkuàng |
| 14 | 平寶蓋 | píngbǎogài |
| 15 | 冰 | bīng |
| 17 | 下三框 | xiàsānkuàng |
| 18 (Variant) | 立刀旁 | lìdāopáng |
| 20 | 包字頭 | bāozìtóu |
| 22 | 左三框 | zuǒsānkuàng |
| 22 | 區字框 | qūzìkuàng |
| 26 | 單耳旁 | dān'ěrpáng |
| 31 | 圍 | wéi |
| 31 | 圍字框 | wéizìkuāng |
| 31 | 口字框 | kǒuzìkuāng |
| 31 | 大口框 | dàkǒukuāng |
| 60 | 雙人旁 | shuāngrénpáng |
| 64 (Variant) | 提手旁 | tíshǒupáng |
| 75 | 木字旁 | mùzìpáng |
| 81 | 比較 | bǐjiào |
| 82 | 毛筆 | máobǐ |
| 86 (Variant) | 四點 | sìdiǎn |
| 87 (Variant) | 爪部 | zhuǎbù |
| 93 (Variant) | 牛字旁 | niúzìpáng |
| 94 (Variant) | 反犬旁 | fǎnquǎnpáng |
| 94 (Variant) | 犬部 | quǎnbù |
| 96 (Variant) | 王字旁 | wángzìpáng |
| 115 | 禾木旁 | hémùpáng |
| 120 (Variant) | 絞絲旁 | jiǎosīpáng |
| 122 (Variant) | 四字頭 | sìzìtóu |
| 129 | 毛筆 | máobǐ |
| 141 | 虎 | hǔ |
| 149 (Variant) | 言字旁 | yánzìpáng |
| 157 (Variant) | 足字旁 | zúzìpáng |
| 162 (Variant) | 走字旁 | zǒuzìpáng |
| 162 (Variant) | 建字旁 | jiànzìpáng |
| 163 (Variant) | 右耳朵 | yòu'ěrduo |
| 167 (Variant) | 金字旁 | jīnzìpáng |
| 170 (Variant) | 耳刀 | ěrdāo |
| 184 (Variant) | 食字旁 | shízìpáng |

I'll continue to collect colloquial names in Eclectus' SVN repository, so check there for updates.
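For the Eclectus use case of searching the radical table by Chinese name, a lookup should accept both characters and Pinyin typed without tone marks. The Python 3 sketch below copies a few entries from the tables above into a hypothetical RADICAL_NAMES list and strips tone marks via NFD decomposition; note that this also folds ü into u, which is probably acceptable for a search box.

```python
import unicodedata

# A few entries copied from the tables above: (radical number, name, Pinyin).
RADICAL_NAMES = [
    (9, '單立人', 'dānlìrén'),
    (9, '單人旁', 'dānrénpáng'),
    (85, '三點水', 'sāndiǎnshuǐ'),
    (104, '病字旁', 'bìngzìpáng'),
    (140, '草字頭', 'cǎozìtóu'),
]


def strip_tones(pinyin):
    """Remove all combining marks after NFD decomposition (tone marks and the ü diaeresis)."""
    decomposed = unicodedata.normalize('NFD', pinyin)
    return ''.join(c for c in decomposed if unicodedata.category(c) != 'Mn')


def find_radicals(query):
    """Radical numbers whose name or toneless Pinyin contains the query."""
    q = strip_tones(query).lower()
    return sorted({rad for rad, name, pinyin in RADICAL_NAMES
                   if q in name or q in strip_tones(pinyin)})


print(find_radicals('bingzipang'))  # [104]
print(find_radicals('ren'))         # [9]
print(find_radicals('病'))          # [104]
```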

Coming from a workshop

Submitted by Christoph on 29 July, 2009 - 00:09

I'm on the train back from a workshop on Japanese and computing. Meeting these people was something new for me. I got a very warm welcome and had some nice conversations.

Hearing about the problems other people have with related work lets you know you're not alone, and talking about solutions brings new insights. For example, we talked about the limitations of SQL databases with Unicode characters outside the BMP (not supported in MySQL 5.0), collation issues, Java's inability to properly render Devanagari (or was it some other Indian script?), and encoding issues in general.
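The BMP limitation is easy to illustrate: characters above U+FFFF need four bytes in UTF-8 and a surrogate pair in UTF-16, while MySQL 5.0's utf8 character set stores at most three bytes per character. A small Python 3 sketch with hypothetical helper names, using U+20000, the first character of CJK Unified Ideographs Extension B:

```python
def outside_bmp(text):
    """Characters above U+FFFF, i.e. outside the Basic Multilingual Plane."""
    return [c for c in text if ord(c) > 0xFFFF]


def utf8_widths(text):
    """Number of UTF-8 bytes needed for each character."""
    return [len(c.encode('utf-8')) for c in text]


sample = '漢\U00020000字'
print([hex(ord(c)) for c in outside_bmp(sample)])  # ['0x20000']
print(utf8_widths(sample))                          # [3, 4, 3]
print(len('\U00020000'.encode('utf-16-le')))        # 4: a surrogate pair
```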

The creator of WadokuJT attended the workshop, and it was interesting to get some insights from his long experience (by my standards) in the field of dictionaries. There seems to be almost no project funding; most of the energy comes from private investment. While the dictionary was started because of the lack of proper electronic dictionaries, some years later it is now widely used among researchers in Japanese Studies. The creator's main concern is quality: unlike most wikis, dictionaries place high demands on contributors' (translation) abilities, so the community-based wiki approach is less suitable here.

I learnt that over time you will come across plenty of people with new and noteworthy ideas, only to see that many of them lack the commitment and stamina to carry their ideas through. It seems that a greater level of persistence is demanded of the online folks. It's not ideas that are missing; it's people willing to carry through with a task that may only yield satisfying results in the long run.

Judging by the discussions, there seems to be a lack of qualified people skilled in both computer science and Japanese or other languages, and research still needs to catch up with technological development. Studying a linguistic subject with computer science as a second major does not seem to be widely offered, at least in Germany. People in research are either linguists turned geeks or computer scientists turned language lovers. A project on online collaborative translation presented at the workshop gave a good idea of what a linguistic programming project can look like. People fluent in both natural and programming languages seem to be highly welcome!

Sadly, I learnt that Japanese specialists don't do Chinese and Chinese specialists don't do Japanese. While we agreed that the individual East Asian studies could benefit greatly from each other in the computing field, there is little cooperation. Buddhism researchers are the only ones to have mastered the language bridge in this area.

It was interesting to see, though, that copyright, or the lack of clear rulings on such cases, is an issue here too, as for dictionaries and other collections it is often unclear to what extent copyright law applies. Data in general, or more commonly the lack of it, is a big topic and mostly needs two things: funding and a willingness to share.

Overall it was an interesting debate, and everybody left with new ideas and impressions. It's nice to meet people in person, and for me it greatly improves the quality of the debate.

Update: And I definitely need a Mac in this community :)