Bootstrapping Tegaki handwriting models using character decomposition

Just yesterday, I committed a new list of character decompositions to cjklib, that was gratefully released under LGPL by Gavin Grover. While until now the about 500 entries served more as a proof of concept, we now have more than 20.000 decompositions spanning the most important characters as encoded by Unicode.

So I wanted to do something nice with this new set of data. I picked the Tegaki project which offers handwriting recognition for Kanji and Hanzi, the latter for Simplified Chinese. I remember showing off the Qt widget I developed to a friend, who then promptly drew a Traditional Chinese character that couldn't be recognized. That was of course because Tegaki (and back then Tomoe) doesn't support Traditional Chinese. Until now.

The problem

So Tegaki organizes its handwriting sets by character encoding sets (JIS for Japanese, GB2312 for Simplified Chinese). For Traditional Chinese we then need to cover BIG5, which, oh horror, comes with 13063 characters, while GB2312 with 6763 entries has only half the size. Well, we could of course copy a lot of models from GB2312, as the simplification process in the 1950s didn't simplify all characters in use, thus leaving the two sets with a good number of shared characters. We could even join in the Japanese set.

But that won't be enough. As GB2312 and the Japanese are only half the size, this surely will leave enough characters unsupported. So now let me bring in the new decomposition data. What if we just build together our models like small Lego bricks?

The solution

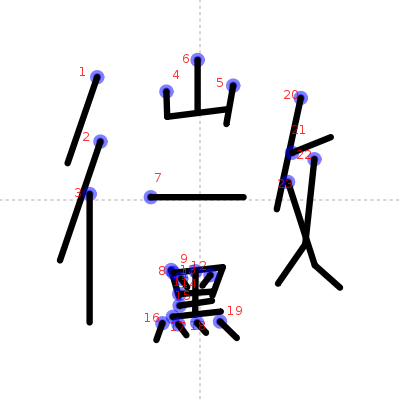

Take for example character 黴: It can be described by the Ideographic Description Sequence (IDS) ⿲彳⿳山一黑攵, or graphically:

山 彳一攵 黑

So all we need to do is, scale the components' models and merge them together. This is what I've done in my local copy of Tegaki at Github.

There's a new script tegaki-bootstrap and it basically just does the simple step described above: It merges the component models given a set of rules, and then gives some output of what is missing:

$ python tegaki-bootstrap --domain=BIG5 --locale=T --max-samples=1 \ handwriting-zh_TW.xml -d ./xml \ -t ../tegaki-zinnia-simplified-chinese/handwriting-zh_CN.xml \ -t ../tegaki-zinnia-japanese/handwriting-ja.xml ................................................................................ .................................... Exact transformation count: 6566 (50%) Decomposition transformation count: 5157 (39%) Decomposition fertility: 1 Missing transformations: 1340 (10%) Missing single characters: 丏丮丳偋偯傜傝傞僯儐儑儤儴儵儹內冇剆剺劗勷匴卄卌卬厴吳唚喡嗀嗂嗩嘂 嘾嚐嚗嚚嚳囋囥囧囪圁圇圌圔圚圛圞垕垹埐堶壆壨壴夒夗奫奭奱婖媱媺嫈嫨嬂 嬮嬼嬽孷孿宧寋尐峊峹嵕嶜嶨嶭嶯 嶴巂巑巕巟巰巿帣廄廌弮彧忀悹惾愋愮慲懪懰懹戶扥抮挀 掁搵摥擊擿攇敹敻斄斲斻斿旂旍旐旓旚旝旞旟暪暵曫朡 柂栔梫棖棦棩椼楥榖榣榦樠樦樴橀橆 橚檕檟櫏櫜欀欳欴歅歞歲毌毚毻毾氂氳氶沀沴泲洍洯淂渀溠溦溫溼滎滘漡漦 潚澦澩濈濦瀗瀪 瀼瀿焂熅熒熜熯熽燢爂爙牚牬牶犕犛犦狦猘猣猺獡獽獿玁玈玊琁璊璿瓖瓥瓽甇甪癟癥癵眕眽 瞉 矎矕矙礐祣祲禋禐禜禢禸稯竮竷竻笀笁笅笉笎笐笒笓笚笝笢笣笭笯笰笲笴笻筀筄筊筎筡筣 筤筦筩筭筳筶筸箂箄箈 箊箌箎箑箖箛箠箤箯箵箷箹箾篊篎篔篕篘篛篜篞篟篢篣篨篫篰篲篴篸 篹篻篽篿簁簂簃簅簆簉簊簎簐簙簜簞簠簢簥 簨簩簬簭簰簳簹簻簼籅籇籈籉籊籓籗籙籚籛籜籣 籦籧籩籪籫籮籯籲粵紾絁絜絭綁綅縈縕繌繡繫纕罃罊羋羕羖羛羜 羠羢羥羦羬羭羱羳羵羷羺羻 翏翛翪胊胏胐胣胾脁膍膧膱臝臦臮臷臿舋舝艐艖虒虩蛓蛗蛪蜁蜵蝆蝬蝯螒螜螣螤螶蟗 蟙蟳蟿 蠜蠠蠤蠫蠯蠰蠽蠿衋衎衕衖衚袌袘袬袲裊裗裛裫褁褎褑褟褢褭褮褱褼襮覂覛覮覶觠觢觰觲觷 諲諼謍讟豋 豖豰賡賮賸賾趀趶趷趹跁跅跇跈跍跐跓跕跘跙跜跠跢跦跧跩跮跰跱跲跴踀踃踄踇 踍踑踒踓踕踖踗踘踙踚踛踜踠踡 踤踥踦踧踫踳踶踸踼踾踿蹅蹍蹎蹓蹖蹗蹚蹛蹜蹝蹞蹡蹥蹧蹪 蹳蹸蹺蹻躂躆躈躌躎躒躕躖躘躚躝躟躣躤躥躦躨躩轀 轚辦郻鄇鄡酨醝醞醟鉓鋟錛鍐鍰鎀鎃鎈 鎉鎞鎣鏽鑤鑲閞闉闒雗雟霵霺鞗韅韏頖頧頯顢颻饜駗騣驌驖髊鬌鬗鬤鬷鬺 鯈鯗鯬鰨鰩鰴鱐鱟 鱨鴘鴭鵖鵩鵹鶢鶱鶾鷅鷇鷫鷰鸁鸔鹺麍黫鼘齤齹兀嗀刱絔髒霥韰虈弚蔾耇媐 Missing components: 58 Missing in-domain components: 卄 (頀蘄薉莍蔎莝蔞茢蘤芧蔮蘴荂藙葨蕮蓳棻艼玂嚄爇蔉 薎蔙蒘蘟薞 礡萣蔩蒨萳芼薾葃菂荍藎藞菢蓨苬薃蠆蔈莗薣莧蒩蔨蘪儰薳蘺藃荌菗葞藣菧蓩苭 蓹蕸葾萉莌薘蒞蔣芢蘥蒮蘵茷萹 薸苂韄藈蓎蕓藘藨寬苲藸菼蓾莁蔊蒏薕蘘芛茦蔪薵萴藅葄蓏 菑葔荖濩蓯葴荶芀芐薚芠蘣蔥蒤莦萯蘳萿荁苀虃蕅菆 臒蓔雘藚葟蕥薧苰蕵菶茀躉莋萊薟蘞茠 蔤蘮萺薿蘾蓅蕄菋鑊蕔臗菛藟藯蘉蔏菬莐蘙蒚蒪蔯薴芶蘹葅藄荋髖葥蓪苶 蓺薁蘌萐莕蔖蒛蘜 薡莥蒫茪蘬薱萰葀蕆虌藑葐菕蓛藡葠蕦蓫藱葰菵蕶蓻荺薆薖萛蒠茥萫蔱蒰檴薶萻蓀虇藆葋蕑 蕡藦蕱遳藶菺芅薋莏蒑蔠茤莯蒱蔰蘲芵萶莿瓁荄藋葖蕠藫蓱苵荴菿蒆蔋莔芚薠樥莤蒶芺茿藀 菄蕛菤蕫苪藰蓶荿蘀 芃蔂薍芓蔒莙薝茞蘠芣蒧薽虀苃蓇菉葌荎蓗菙蕢蓧藭矱蓷藽荾薂蔍茙蘛 蔝躠薢茩蒬莮萷蘻藂葇虋蕍蓌藒蕝菞藢葧 苨菮藲菾莃苖蒍萒蒝蔜莣鶧蘦葝萲蘶菃葂藇虆荈葒 藗櫙菣擭蕬菳蓽蕼蘁蔇莈薌芞蘡蒢蔧萭蘱鄿蓂蕇囆菈葍荓蓒鑝 蕧菨藬蓲葽苾), 翏 (雡憀豂轇僇璆蟉穋嫪嘐飂磟漻嵺鄝顟鷚), 歲 (濊檅噦獩顪劌鐬薉饖翽), 毚 (酁欃艬鑱 儳嚵劖攙瀺饞), 虒 (禠磃傂擨歋鷈謕螔鼶榹), 巂 (酅纗驨欈蠵鑴瓗孈觿), 敻 (藑讂觼), 豖 (諑椓剢), 臿 (偛喢鍤), 壴 (尌壾), 臦 (臩燛), 牚 (撐橕), 吳 (俁娛), 褱 (櫰瀤), 夗 (妴駌), 舋 (亹斖), 縈 (瀠礯), 巿 (伂), 絭 (潫), 繫 (蘻), 囧 (莔), 絜 (緳), 滎 (濴), 裊 (嬝), 禸 (樆), 氶 (巹), 儵 (虪), 袲 (橠), 丮 (谻), 熒 (藀), 筄 (艞), 奭 (襫) Missing out-domain components: 罒 (斁嚃鸅羉蠌瞏薎嬛瘝檡禤嶧罝蘮瀱蠉醳澴罞墿眾懁潀彋襗轘罛遝 罜鱞燡罣罥闤獧罦睪罭罬繯噮奰罳罶罻罺罽罼罿翾), 飠 (餀餂餇餑餕餗餖餛餚餟餧餩餫餪餭餯餱餰餳餲餵餺餼 餿饁饃饇饈饎饓饖饙饘饛饟饞饡飣飥飪飶飹), 夋 (痠稄脧踆焌畯鋑餕鵔捘晙朘荾), 叚 (騢徦煆豭犌碬貑猳椵 赮婽麚), 乚 (圠亃踂亄錓癿乿釓耴鮿唴), 尞 (簝橑飉嫽膫憭轑嶚镽蟟), 氺 (忁綠淥錄彔潻剝桼), 厷 (翃 汯閎竑吰谹鈜耾), 臽 (燄淊蜭錎埳欿窞), 爫 (偁檃脟哷蛶鋝乿), 菐 (墣襆瞨轐鏷獛), 屰 (蟨遻瘚蝷鷢), 巤 (儠犣蠟擸), 啇 (墑樀甋蹢), 镸 (镻镺镽镼), 隺 (搉傕篧蒮), 殸 (漀毊鏧韾), 畺 (韁麠殭), 夰 (奡臩昦), 丩 (朻觓虯), 辥 (櫱糱蠥), 卂 (籸鳵阠), 丂 (甹梬涄), 卝 (雈茍), 夨 (捑奊), 夅 (舽袶)

So you can see, 50% of characters are already covered by direct matches of characters from the Simplified Chinese and Japanese set. And then the in my opinion impressive number of 39% coverage by component models. So right now there are "only" 1340 characters left, that need extra handwriting data. Not really though: The script additionally analyses components with high productivity. If you supply a handwriting model of 卄, you'll most probably increase the set of covered charaters by more than 300. And by providing some characters that are not in the set itself, you can even increase the number manifold. And another small bonus: cjklib's component data is still not fully covered. You can see some characters handled as "single characters", that clearly have a component structure. Adding 10 entries here will make the number of missing characters drop even further.

How well does it work?

So, does this really work? Components have different sizes, and merging will generally never yield the correct proportions. So I ran the resulting model against a small set of characters I drew by hand. This set only includes characters from the 39% range, i.e. characters we use the "component approach" with:

$ tegaki-eval -d xml_test/ zinnia "Traditional Chinese"

Overall results

Number of characters evaluated: 20

match1

Accuracy/Recall: 70.00

Precision: 70.00

F1 score: 70.00

match5

Accuracy/Recall: 75.00

match10

Accuracy/Recall: 85.00

I think that is pretty well, considering my awful handwriting ;)

There a still more things to be done, like improving the merging-algorithm to better integrate the component's bounding box, or handling bootsrapping sources by locale: In some cases Japanese stroke order is different to the Chinese one, so we need to supply own data here. Cjklib has a framework to provide this kind of information. Now we only need to supply it with more data!

I got this error

hi,

i try python tegaki-bootstrap --domain=BIG5 --locale=T --max-samples=1 \

handwriting-zh_TW.xml -d ./xml \

-t ../tegaki-zinnia-simplified-chinese/handwriting-zh_CN.xml \

-t ../tegaki-zinnia-japanese/handwriting-ja.xml

get the error

Traceback (most recent call last):

File "tegaki-bootstrap", line 64, in

from tegakitools.charcol import *

File "/home/cthung/hwr/tegaki-tools/src/tegakitools/charcol.py", line 25, in

from tegaki.charcol import CharacterCollection

ImportError: No module named charcol

please help me ,thanks !

Mixed issues

Hi Cheng-Ta, I assume you might have two issues here:

It seems Tegaki changed the API and with "charcol" a new module has been created. I needed to change tegaki-bootstrap to follow the latest changes:

diff --git a/tegaki-tools/src/tegaki-bootstrap b/tegaki-tools/src/tegaki-bootstrap

index bf15032..6b92647 100755

--- a/tegaki-tools/src/tegaki-bootstrap

+++ b/tegaki-tools/src/tegaki-bootstrap

@@ -59,7 +59,8 @@ import random

random.seed(12345) # provide deterministic results

from optparse import OptionParser

-from tegaki.character import CharacterCollection, Writing, Character

+from tegaki.character import Writing, Character

+from tegaki.charcol import CharacterCollection

from tegakitools.charcol import *

In addition, as your error message is different to mine, I assume you have an older version of Tegaki in the background (installed through package management?). The source you downloaded doesn't find the newer modules. Either you install the newer version (python setup.py install), or (under Unix) you tell Python where to look for the newest modules: "export PYTHONPATH=$PYTHONPATH:/home/cthung/hwr/tegaki-python/".

I suggest that you come to http://groups.google.com/group/tegaki-hwr?hl=en so we can better solver your issue.